Database

Database support is only available in Crawlab Pro.

By default, Crawlab saves collected data to its default database (MongoDB). However, Crawlab aims to be a multipurpose web crawler management platform that can manage crawlers for various purposes. It is therefore designed to be flexible and to integrate easily with mainstream databases, so that you can store your scraped data in the database of your choice.

The Database module in Crawlab is similar to database management tools such as DBeaver or DataGrip.

Supported Databases

Crawlab currently supports the following databases:

We are working on adding more database support, including but not limited to:

Database Management

Crawlab provides a centralized database management interface, where you can manage your databases and data collections.

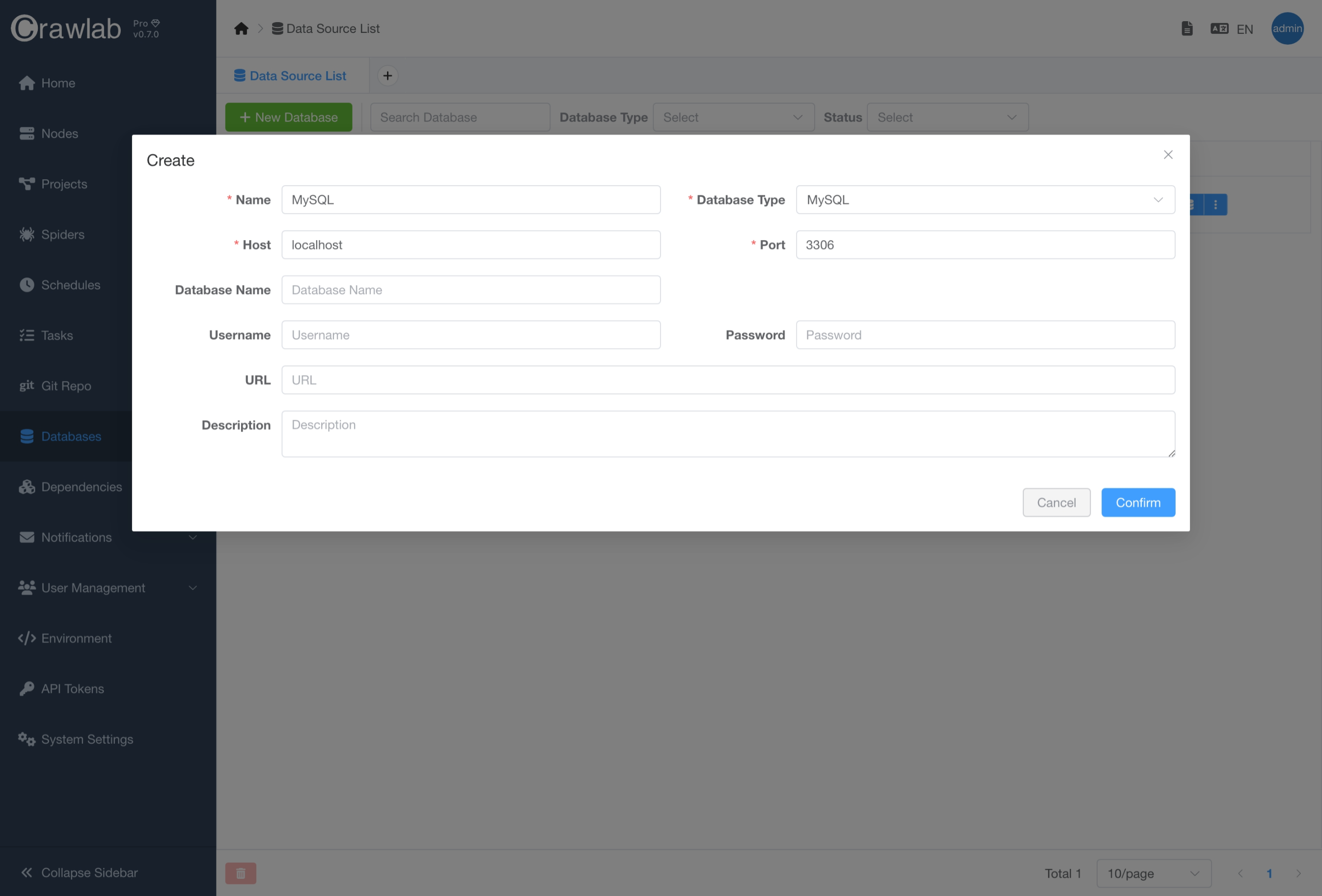

Create Database

You can create a new database by clicking the New Database button in the database management interface and entering the database connection information.

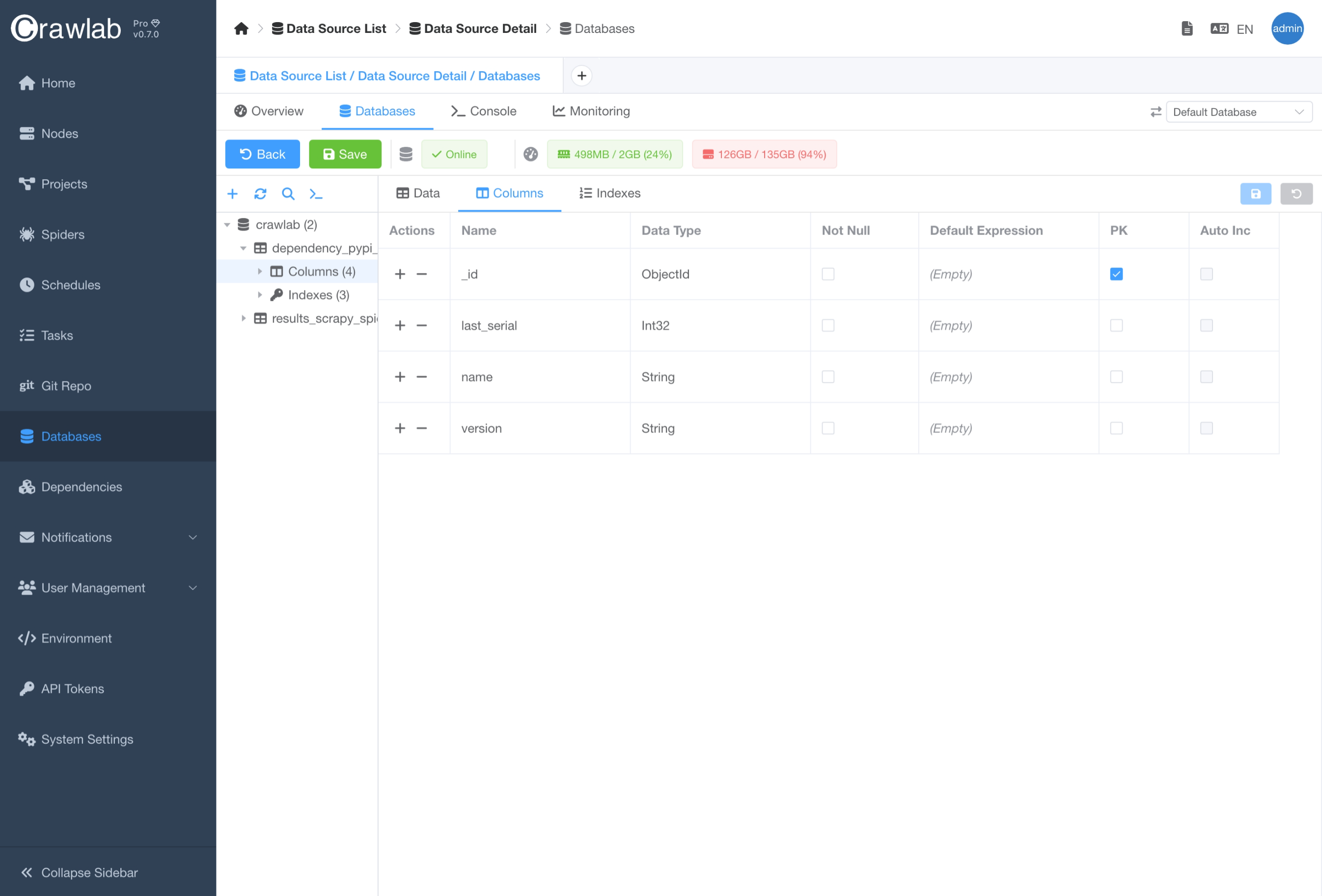

Update Database Schema

You can alter the database schema, for example by adding, updating or deleting tables, in the Databases tab on the Database detail page.

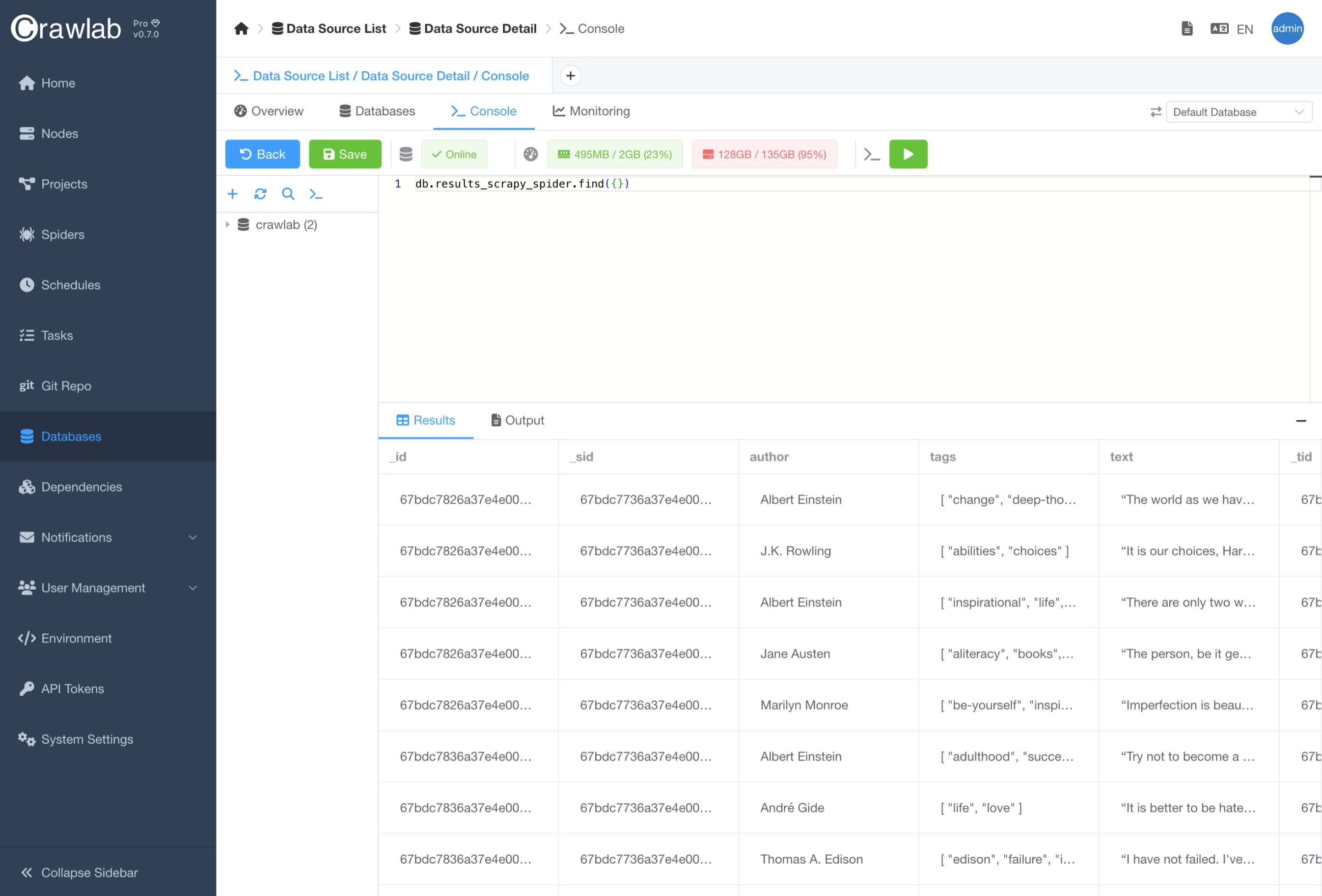

Execute Query

You can also execute database queries in the Console tab in the Database detail page. This is useful when you want to test your queries before running them in your code.
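As an illustration of that workflow, the sketch below runs a query from code after it has been checked in the Console. It uses Python's built-in `sqlite3` module with an in-memory database purely as a stand-in, since this page does not say which database you are connecting to. The `products` table, its columns, and the function names are hypothetical.

```python
import sqlite3

# Hypothetical query, first tried out in the Console tab.
# The table and column names are placeholders for your own schema.
QUERY = "SELECT title, price FROM products WHERE price > ? ORDER BY price DESC"


def fetch_expensive(conn: sqlite3.Connection, min_price: float) -> list:
    """Run the pre-tested query with a bound parameter and return all rows."""
    return conn.execute(QUERY, (min_price,)).fetchall()


def demo_connection() -> sqlite3.Connection:
    """Build an in-memory stand-in database with a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (title TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products VALUES (?, ?)",
        [("a", 5.0), ("b", 20.0), ("c", 12.5)],
    )
    return conn
```

Binding the threshold as a parameter (the `?` placeholder) rather than formatting it into the SQL string keeps the query text identical to the one tested in the Console. Other database drivers may use a different placeholder style.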

Connect to Database

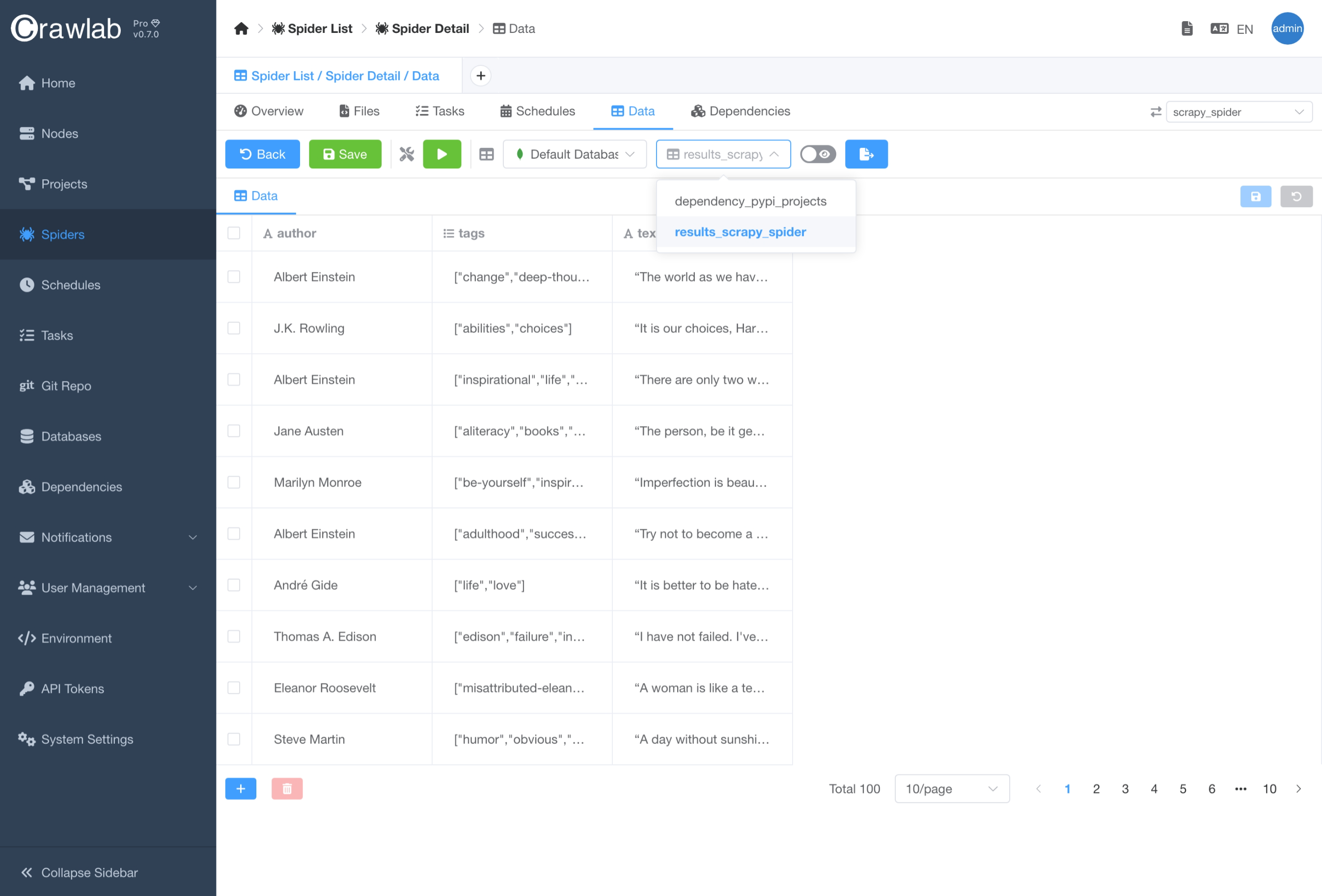

In the Data Integration section, you learnt how to use the Crawlab SDK to store data in Crawlab so that you can preview the scraped data.

The Database module is designed to be a centralized database management interface, where you can easily manage your databases and tables. In addition, you can select the database you would like to store your scraped data to in the Spider detail page.

Follow the steps below to connect to a database.

- Create a new database in the Databases list page.
- Navigate to the Spider detail page.
- Navigate to the Data tab.
- Choose the database and table for storing scraped data.
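On the spider side, items are presumably still handed to Crawlab through the SDK as described in the Data Integration section, with the database and table chosen in the Data tab deciding where they end up. The sketch below assumes the Python SDK's `save_item` function. The helpers `load_save_item`, `normalize` and `run`, as well as the item fields, are hypothetical, and a print-based stand-in is used when the SDK is not installed.

```python
import json
from typing import Callable, Dict, Iterable


def load_save_item() -> Callable[[Dict], None]:
    """Return crawlab.save_item if the SDK is installed, else a local stand-in."""
    try:
        from crawlab import save_item  # provided by the Crawlab Python SDK
        return save_item
    except ImportError:
        def _print_item(item: Dict) -> None:
            # Stand-in for local runs only: print the item as JSON.
            print(json.dumps(item))
        return _print_item


def normalize(raw: Dict) -> Dict:
    """Keep only the fields to be stored and trim surrounding whitespace."""
    return {
        "title": str(raw.get("title", "")).strip(),
        "url": str(raw.get("url", "")).strip(),
    }


def run(rows: Iterable[Dict], sink: Callable[[Dict], None]) -> int:
    """Send each normalized row to the sink and return how many were sent."""
    count = 0
    for raw in rows:
        sink(normalize(raw))
        count += 1
    return count
```

In a spider you might call `run(scraped_rows, load_save_item())`. Passing the sink as an argument keeps the scraping logic easy to test without a running Crawlab instance.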